Question

There is an m x n matrix that is initialized to all 0‘s. There is also a 2D array indices where each indices[i] = [ri, ci] represents a 0-indexed location to perform some increment operations on the matrix.

For each location indices[i], do both of the following:

- Increment all the cells on row

ri. - Increment all the cells on column

ci.

Given m, n, and indices, return the number of odd-valued cells in the matrix after applying the increment to all locations in indices.

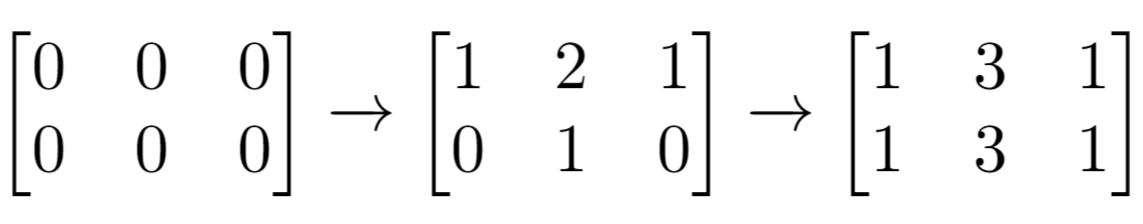

Example 1:

Input: m = 2, n = 3, indices = [[0,1],[1,1]] Output: 6 Explanation: Initial matrix = [[0,0,0],[0,0,0]]. After applying first increment it becomes [[1,2,1],[0,1,0]]. The final matrix is [[1,3,1],[1,3,1]], which contains 6 odd numbers.

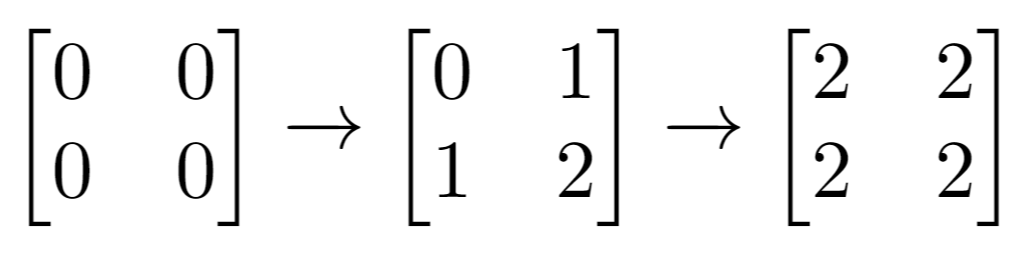

Example 2:

Input: m = 2, n = 2, indices = [[1,1],[0,0]] Output: 0 Explanation: Final matrix = [[2,2],[2,2]]. There are no odd numbers in the final matrix.

Constraints:

1 <= m, n <= 501 <= indices.length <= 1000 <= ri < m0 <= ci < n

Follow up: Could you solve this in O(n + m + indices.length) time with only O(n + m) extra space?

Python Solution

class Solution:

def oddCells(self, n: int, m: int, indices: List[List[int]]) -> int:

rows = [0]*n

cols = [0]*m

for i,j in indices:

rows[i] = rows[i]^1

cols[j] = cols[j]^1

c=0

for i in range(len(rows)):

for j in range(len(cols)):

if rows[i]^cols[j]==1:

c+=1

return c

![[Solved] You are given an integer n and an integer start. Define an array nums where nums[i] = start + 2 * i (0-indexed) and n == nums.length. Return the bitwise XOR of all elements of nums.](https://machinelearningprojects.net/wp-content/uploads/2022/09/Leetcode-solutions-MLP-Feature-Image-1024x536.webp)